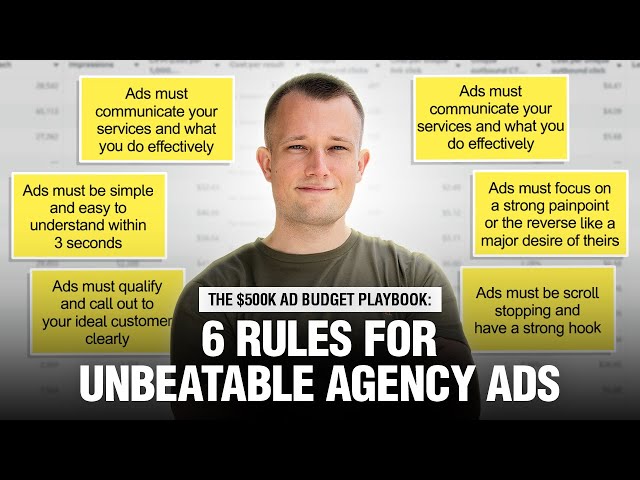

The A/B testing process

The reams of data now available to digital marketers and strategists allow us to identify opportunities for optimisation, test theories and learn on an ongoing basis. Although our key focus at PurpleFire is optimising businesses’ digital offerings, the theories we use day to day to run successful optimisation programmes can be applied just as effectively to offline channels.

When we talk about online optimisation at PurpleFire, we are mainly talking about A/B testing. An A/B test validates a hypothesis by testing it against the current state, which takes away the guesswork. The same methods can be applied to processes that take place offline. Multi-channel retailers are likely to have a website that they optimise, but why not extend experimentation to print catalogues and advertisements, in-store experiences and call centres? Each customer or potential customer touch point is an opportunity to test something new, gather data and learn about your customers.

Just as a visit to your website is an opportunity to sell products, so is a visit to one of your stores. Rather than interacting with your website, however, visitors to your store interact with your space, your products and your staff. This presents a number of new opportunities to test techniques for increasing sales. For example, you could find out whether placing certain items at the checkout, with a prompt from the checkout staff, increases shoppers’ average purchase value. To run the experiment, half of your checkout staff would ask each customer whether they are interested in an additional item and point the items out, while the other half would not. The average purchase value per employee could then be compared between the two groups to assess whether customers respond better to being prompted or prefer being left to make their own decisions.

Optimisation isn’t necessarily about increasing sales; it’s really about increasing ROI, and that can be done in other ways than just getting more people to buy more stuff. Take call centre costs: optimising the processes involved in answering queries and resolving issues over the phone, with the aim of reducing the resources your call centre needs, will have a positive impact on your business’s ROI. Testing the impact of a script for your call centre employees to follow would allow you to assess the most effective approach. Scripts may help employees to answer more calls per day, increasing the efficiency of the call centre.

However, the reduced personality may have a negative effect on the customer’s feedback about the call and their overall perception of the company. This experiment could be conducted as an A/B test by randomly allocating each call centre employee to a group: A (unscripted) or B (scripted). Every call an employee receives then follows the format of their allocated group.
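The random allocation described above can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed method: the function name `assign_groups`, the employee IDs and the fixed seed are all assumptions made for the example.

```python
import random


def assign_groups(employee_ids, seed=None):
    """Randomly split employees into group A (unscripted) and group B (scripted)."""
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible
    shuffled = list(employee_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # With an odd headcount, group B receives the extra person.
    return {"A": sorted(shuffled[:half]), "B": sorted(shuffled[half:])}


# Hypothetical employee IDs, for illustration only.
employees = ["emp01", "emp02", "emp03", "emp04", "emp05", "emp06"]
groups = assign_groups(employees, seed=42)
print(groups)
```

Allocating by shuffle, rather than by team, shift or seniority, helps to keep the two groups comparable, so that any difference in results is more likely to come from the script than from who happened to be in each group.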

Photo by plantronicsgermany, Flickr

The key thing to get right with both online and offline optimisation is measurement. Defining what success looks like for offline optimisation efforts can be trickier than analysing online experiments with tools such as Google Analytics.

Take the call centre example: how do we measure the effectiveness of the two methods to decide which is best for your business? Comparing the number of calls answered per day in group A and group B would show which method was more efficient. Depending on the sophistication of your systems, this data may be relatively easy to pull per employee; the figures can then be combined to give the call numbers for each group.
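Combining per-employee figures into group totals might look something like the sketch below. The call counts, employee IDs and the `calls_per_group` helper are hypothetical; in practice the numbers would come from your own call centre system.

```python
from collections import defaultdict


def calls_per_group(daily_calls, group_of):
    """Combine per-employee call counts into totals and averages per group."""
    totals = defaultdict(int)
    headcount = defaultdict(int)
    for employee, calls in daily_calls.items():
        group = group_of[employee]
        totals[group] += calls
        headcount[group] += 1
    return {
        group: {
            "total_calls": totals[group],
            # Averaging per employee keeps groups of unequal size comparable.
            "calls_per_employee": totals[group] / headcount[group],
        }
        for group in sorted(totals)
    }


# Hypothetical data: calls answered by each employee in one day.
daily_calls = {"emp01": 42, "emp02": 38, "emp03": 51, "emp04": 47}
group_of = {"emp01": "A", "emp02": "A", "emp03": "B", "emp04": "B"}

summary = calls_per_group(daily_calls, group_of)
print(summary)
```

Reporting calls per employee alongside the raw total matters when the groups are not exactly the same size, because a larger group will answer more calls regardless of the method it uses.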

But how do we measure how the method affected the customer experience and the customer’s perception of the company? A post-call satisfaction survey will provide insight into how customers responded to the method the employee used to answer their query. Asking customers at the start of the call whether they would be happy to take part in the survey once their query has been dealt with should help to gather enough responses to test whether any difference between the groups is statistically significant.

Just like online satisfaction surveys, phone surveys should be kept short and sweet. It is also important to consider how you are going to use the data when constructing your questions. Asking customers to rate their experience on a scale of 1 to 10 will provide quantitative data that you can easily compare with the data for the other group. Asking open questions will help you understand the specific reasons for a customer’s positive or negative experience. However, analysis of this qualitative data is open to interpretation, so it is better used to highlight opportunities for further improvement within each method than to assess the impact of the experiment.
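One way to compare the 1 to 10 ratings from the two groups is a permutation test on the difference in average score. This is only one of several reasonable approaches, and it is our choice for the illustration rather than something the survey method dictates. The scores below are made up, and `permutation_test` is a hypothetical helper.

```python
import random
from statistics import mean


def permutation_test(scores_a, scores_b, n_permutations=10_000, seed=0):
    """Return (difference in mean score, two-sided p-value) for B minus A."""
    rng = random.Random(seed)
    observed = mean(scores_b) - mean(scores_a)
    pooled = list(scores_a) + list(scores_b)
    n_a = len(scores_a)
    extreme = 0
    for _ in range(n_permutations):
        # Reshuffle the group labels and see how large a gap chance alone gives.
        rng.shuffle(pooled)
        diff = mean(pooled[n_a:]) - mean(pooled[:n_a])
        if abs(diff) >= abs(observed) - 1e-12:  # small tolerance for float rounding
            extreme += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    return observed, (extreme + 1) / (n_permutations + 1)


# Hypothetical survey ratings, for illustration only.
scores_a = [8, 9, 7, 9, 8, 10, 7, 8]  # group A (unscripted)
scores_b = [6, 7, 8, 5, 7, 6, 8, 6]   # group B (scripted)

observed, p_value = permutation_test(scores_a, scores_b)
print(f"Mean difference (B - A): {observed:.3f}, p-value: {p_value:.4f}")
```

A small p-value suggests the gap in ratings is unlikely to be down to chance alone. It says nothing about why customers preferred one method, which is where the open questions come in.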

A final consideration is that your employees play an integral part in conducting offline experiments, because more often than not the experiments affect how they go about their day-to-day jobs. The first step in replicating traditional A/B testing processes offline is explaining to your staff not only what they will be required to do, but also why. Rather than simply telling them what they have to do differently, provide some training or involve them in a workshop early on to get them on board with your plans. Gathering their ideas for improvement and optimisation will also help them feel included, and since these are the people dealing with customers on a day-to-day basis, their ideas are likely to be gold dust.

Three key points to follow when conducting an offline A/B testing process

- Take measures to ensure the control and variation are maintained consistently throughout the experiment. Offline optimisation is more reliant on your team, so human error and interpretation could come into play and affect the validity of your results.

- Plan how you are going to measure the experiment early on and put in place any necessary new procedures to ensure you have good-quality data at the end of the experiment.

- Just as with online optimisation, we recommend that any experiment runs for at least two business cycles, reaches at least 300 conversions in each group and, most importantly, achieves statistical significance.
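
The three criteria in the last point can be checked together, as in the rough sketch below. It assumes the outcome is a conversion rate and uses a two-proportion z-test with a 0.05 significance threshold; the test, the threshold, the function names and the example figures are all our assumptions for illustration, not fixed rules.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


def ready_to_conclude(conv_a, n_a, conv_b, n_b, cycles_run,
                      min_cycles=2, min_conversions=300, alpha=0.05):
    """Check duration, conversions per group and significance (alpha is assumed)."""
    p_value = two_proportion_p_value(conv_a, n_a, conv_b, n_b)
    return {
        "enough_cycles": cycles_run >= min_cycles,
        "enough_conversions": min(conv_a, conv_b) >= min_conversions,
        "significant": p_value < alpha,
    }


# Hypothetical results: 300 of 5,000 customers converted in A, 360 of 5,000 in B.
checks = ready_to_conclude(300, 5000, 360, 5000, cycles_run=2)
print(checks)
print("Safe to conclude:", all(checks.values()))
```

Keeping the three checks separate makes it clear which condition is holding an experiment back, for example a result that looks significant but has not yet run for two full business cycles.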